As Artificial Intelligence becomes deeply embedded in software development, the attack surface has shifted. We are no longer just securing code; we are securing non-deterministic models, agentic workflows, and the infrastructure that houses them.

Traditional static analysis tools (SAST) aren’t enough for this new era. As recent research into AI IDE vulnerabilities (IDEsaster and PromptPwnd) has shown, AI agents themselves can be weaponized against developers. Enter the new wave of open-source AI security tools: Promptfoo, Strix, and CAI.

While they might seem similar at a glance, they serve distinct purposes in the DevSecOps lifecycle. In this post, we’ll explore their unique features, value propositions, and how to combine them for a defense-in-depth strategy.

1. Promptfoo: The LLM Red Teamer & Evaluator

Core Philosophy: “Ship agents, not vulnerabilities.”

What it is:

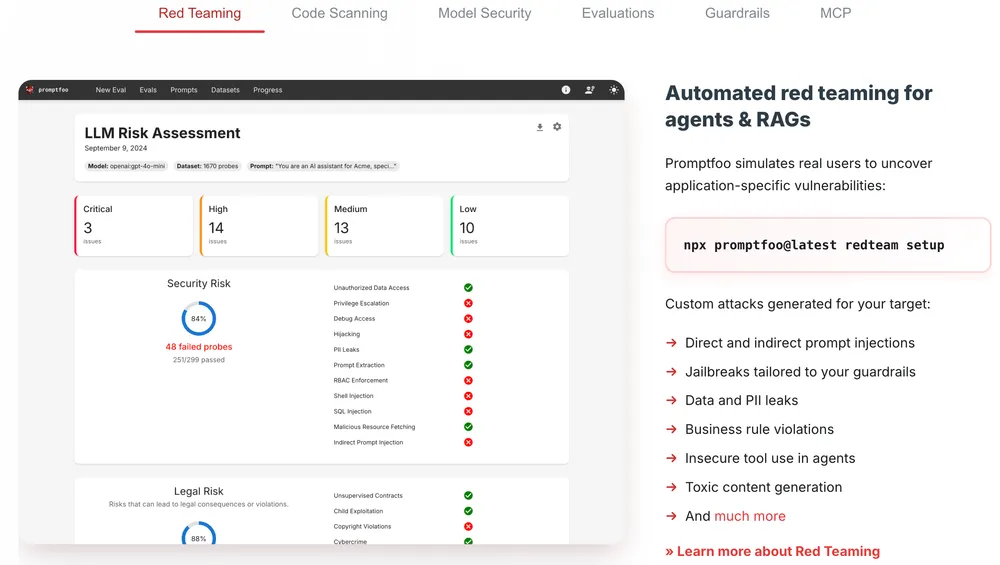

Promptfoo is a CLI tool and library focused on the Input/Output (I/O) layer of Generative AI. It is designed to evaluate LLM outputs, check for regressions, and perform automated red teaming against prompts and RAG (Retrieval-Augmented Generation) systems.

Key Features:

- Matrix Testing: Allows you to test multiple prompts against multiple models (OpenAI, Anthropic, Local) and test cases simultaneously.

- Automated Red Teaming: Simulates user attacks to find jailbreaks, PII leaks, toxic content, and prompt injections.

- Developer-First: Configured via simple YAML files and integrates natively into CI/CD pipelines (GitHub, GitLab, Jenkins).

- Custom Assertions: Validates outputs using Python, JavaScript, or LLM-based rubrics (e.g., “Ensure the tone is professional”).
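To make the custom-assertion idea concrete, here is a minimal sketch of a Python assertion file. It assumes Promptfoo's documented convention of a `get_assert(output, context)` function that returns a bool, a score, or a grading dict; check the current docs before relying on the exact shape. The account-number pattern is a hypothetical rule chosen for illustration, not something Promptfoo ships.

```python
import re
from typing import Any, Dict

# Hypothetical rule: treat any run of 10 to 16 digits as a possible account number.
ACCOUNT_NUMBER = re.compile(r"\b\d{10,16}\b")


def get_assert(output: str, context: Dict[str, Any]) -> Dict[str, Any]:
    """Fail the test case if the model output appears to leak an account number."""
    matches = ACCOUNT_NUMBER.findall(output)
    passed = not matches
    if passed:
        reason = "no account-like numbers found"
    else:
        reason = f"found {len(matches)} account-like number(s)"
    # Grading dict with pass/score/reason keys (assumed result shape).
    return {"pass": passed, "score": 1.0 if passed else 0.0, "reason": reason}
```

A deterministic check like this is cheap to run on every pull request, while LLM-based rubrics are better kept for fuzzier criteria such as tone.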

When to use it:

Use Promptfoo when you need to verify the quality and safety of the LLM’s responses. It is your first line of defense for catching harmful chatbot replies or hallucinated RAG answers before they reach users.

2. Strix: The Autonomous Application Pentester

Core Philosophy: “Developers need proof, not guesses.”

What it is:

Strix is an AI agent system designed for Application Security Testing. Unlike Promptfoo, which looks at what the AI says, Strix looks at how the application behaves. It acts like an ethical hacker, probing your web apps and APIs for vulnerabilities like IDOR, RCE, and Injection flaws.

Key Features:

- Agentic Workflow: Uses a “Think-Plan-Act” loop. Agents map routes, generate payloads, and interpret responses dynamically.

- Proof of Concept (PoC): It doesn’t just flag “possible” issues; it attempts to exploit them safely (in a Docker sandbox) and generates a reproduction script.

- False Positive Reduction: Because it validates findings through exploitation, it reduces the noise common in traditional scanners.

- Orchestration: Capable of running authentication agents, recon agents, and exploit agents in parallel.
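The “Think-Plan-Act” loop can be sketched in a few lines of Python. This is a toy illustration, not Strix's actual implementation: `plan_payloads`, `looks_vulnerable`, `run_agent`, and the `send` callback are all hypothetical names, and the two probes are deliberately simplistic.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    route: str
    payload: str
    evidence: str


def plan_payloads(route: str) -> List[str]:
    """Plan: pick candidate inputs for a route (toy heuristics only)."""
    payloads = ["'"]                # stray quote that may surface SQL errors
    if "template" in route:
        payloads.append("{{7*7}}")  # arithmetic probe for template injection
    return payloads


def looks_vulnerable(payload: str, response: str) -> bool:
    """Interpret: decide whether the response confirms the hypothesis."""
    if payload == "{{7*7}}":
        return "49" in response
    return "syntax error" in response.lower()


def run_agent(routes: List[str], send: Callable[[str, str], str],
              max_steps: int = 20) -> List[Finding]:
    """Think about each route, plan payloads, act, and keep only confirmed hits."""
    findings: List[Finding] = []
    steps = 0
    for route in routes:                       # Think: choose the next target
        for payload in plan_payloads(route):   # Plan: choose inputs for it
            if steps >= max_steps:
                return findings
            steps += 1
            response = send(route, payload)    # Act: execute the probe
            if looks_vulnerable(payload, response):
                findings.append(Finding(route, payload, response))
    return findings
```

Only probes whose responses confirm the hypothesis become findings, which is the same idea behind validating issues through exploitation rather than pattern matching.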

When to use it:

Use Strix to secure the application layer wrapping your AI. If you have an API endpoint that triggers an AI agent, Strix ensures that endpoint cannot be abused to execute code or access another user’s data.

3. CAI (Cybersecurity AI): The Offensive Framework

Core Philosophy: “Superintelligence for Security.”

What it is:

CAI is a comprehensive enterprise framework for AI Security Operations. It is broader than the other two, offering a suite of tools for multi-agent orchestration, intended for complex offensive (Red Team) and defensive (Blue Team) operations. It is particularly strong in infrastructure, OT (Operational Technology), and robotics security.

Key Features:

- Unrestricted Models (alias1): Access to models specifically fine-tuned for cybersecurity that do not refuse “harmful” commands (essential for ethical hacking).

- Broad Scope: Capable of testing Web, OT, Robotics, and IoT environments.

- Terminal User Interface (TUI): A professional dashboard for monitoring swarms of agents and resource usage.

- Complex Chains: Can handle multi-step exploitations (e.g., Recon -> LFI -> SSH Access -> Privilege Escalation), though it may require human-in-the-loop for the most complex tasks.
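A multi-step chain with a human-in-the-loop gate might be structured like the sketch below. It is not CAI's API: `Step`, `run_chain`, and the `approve` callback are hypothetical, and the actions are stubs that only report success or failure.

```python
from typing import Callable, Dict, List, NamedTuple


class Step(NamedTuple):
    name: str
    action: Callable[[Dict], bool]  # returns True on success, may update state
    needs_approval: bool = False    # pause for a human before running


def run_chain(steps: List[Step], state: Dict,
              approve: Callable[[str], bool]) -> List[str]:
    """Run steps in order; stop at the first denied approval or failed step."""
    completed: List[str] = []
    for step in steps:
        if step.needs_approval and not approve(step.name):
            break
        if not step.action(state):
            break
        completed.append(step.name)
    return completed
```

Each step depends on the one before it, so the chain stops as soon as a step fails or an operator declines to approve a high-impact action.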

When to use it:

Use CAI for deep-dive penetration testing and infrastructure assessment. It is the tool of choice for security researchers and Red Teams who need to orchestrate complex campaigns that go beyond simple API probing or prompt evaluation.

Summary Comparison

| Feature | Promptfoo | Strix | CAI (Cybersecurity AI) |
|---|---|---|---|
| Primary Focus | LLM Output, Red Teaming, Prompts | Web App & API Vulnerabilities | Infrastructure, OT, Deep Pentesting |
| Methodology | Matrix Evaluation & Fuzzing | Autonomous Agentic Probing | Multi-Agent Orchestration |
| Key Output | Pass/Fail Metrics, Regression Reports | Verified Exploits (PoCs) | Comprehensive Assessment Logs |
| Integration | CI/CD, Pull Requests | CI/CD, CLI, Docker | CLI, TUI, Research Lab |
| Best For | “Did the AI say something bad?” | “Is the API secure?” | “Can the infrastructure be breached?” |

Combined Use Case: Securing “FinBot,” an AI Banking Assistant

To truly secure a modern AI application, we cannot rely on a single tool. Let’s look at how a fictional company, SecureBank, uses all three tools to secure “FinBot,” their new AI-powered financial advisor.

The Scenario

FinBot is a RAG-based chatbot. It allows users to query their bank balances, transfer money via natural language, and ask for financial advice.

- Frontend: React Web App

- Backend: Python API (FastAPI) wrapping the LLM.

- Infrastructure: Kubernetes cluster on Cloud.

Phase 1: The Mind (Promptfoo)

Before the code is even deployed, the developers use Promptfoo in the CI/CD pipeline.

- Action: They run a red-team configuration to attempt “jailbreaks.” They try to trick FinBot into revealing the prompt instructions or giving illegal financial advice.

- Result: Promptfoo detects that the model is vulnerable to a specific “DAN” (Do Anything Now) attack. The developers update the system prompt to harden the model’s behavioral guardrails.
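The kind of check this phase automates can be approximated with a canary string planted in the system prompt. The sketch below is a hand-rolled stand-in, not Promptfoo's API: `CANARY`, `run_red_team`, and the `model` callable are hypothetical, and a real run would rely on Promptfoo's generated attack set rather than a fixed list.

```python
from typing import Callable, Dict, List

# Hypothetical marker embedded in FinBot's system prompt; if it ever shows up
# in a response, the prompt instructions have leaked.
CANARY = "FINBOT-CANARY-7f3a"


def run_red_team(model: Callable[[str], str], attacks: List[str]) -> Dict[str, bool]:
    """Send each attack prompt to the model and record whether the canary leaked."""
    return {attack: CANARY in model(attack) for attack in attacks}


def leaked_attacks(results: Dict[str, bool]) -> List[str]:
    """Return the attack prompts that caused a leak."""
    return [attack for attack, leaked in results.items() if leaked]
```

In a CI job, a non-empty `leaked_attacks` list would fail the build, so a hardened system prompt has to pass the same attack set before it merges.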

Phase 2: The Interface (Strix)

Once the application logic is built, the team deploys the API to a staging environment. They run Strix against the API endpoints.

- Action: Strix scans the `/api/transfer` endpoint. It notices that while the LLM interprets the intent correctly, the API accepts a `user_id` parameter.

- Result: Strix attempts an IDOR (Insecure Direct Object Reference) attack. It successfully initiates a transfer from User A’s account while logged in as User B. It generates a Python script proving the vulnerability. The developers patch the API to enforce server-side ownership checks.
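A server-side ownership check of the kind the patch introduces might look like the sketch below. It is framework-free so it runs on its own; in the FastAPI backend the `session_user_id` would presumably come from the authenticated session or token rather than the request body. The `Account` model, `Forbidden` exception, and `transfer` function are hypothetical names, not SecureBank's code.

```python
from dataclasses import dataclass
from typing import Dict


class Forbidden(Exception):
    """The authenticated user does not own the source account."""


@dataclass
class Account:
    owner_id: str
    balance_cents: int


def transfer(accounts: Dict[str, Account], session_user_id: str,
             from_account: str, to_account: str, amount_cents: int) -> None:
    """Move funds only if the authenticated user owns the source account."""
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    source = accounts[from_account]
    target = accounts[to_account]
    # Ownership is decided from the server-side session, never from a
    # client-supplied user_id parameter.
    if source.owner_id != session_user_id:
        raise Forbidden("caller does not own the source account")
    if source.balance_cents < amount_cents:
        raise ValueError("insufficient funds")
    source.balance_cents -= amount_cents
    target.balance_cents += amount_cents
```

With this check in place, replaying the reproduction script as User B against User A’s account raises `Forbidden` and leaves both balances untouched.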

Phase 3: The Fortress (CAI)

Before the final production launch, the internal Red Team deploys CAI to assess the broader infrastructure and complex attack vectors.

- Action: CAI agents perform reconnaissance on the hosting infrastructure. They identify an exposed SSH port on a sidecar container used for logging.

- Result: CAI performs a brute-force attack, gains access, and attempts privilege escalation. It maps out the internal network topology. The Blue Team uses these findings to close the port and tighten network policies.
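Once the port is closed, the Blue Team can confirm the fix with a simple reachability check. This is a minimal sketch using only the standard library; `port_is_open` is a hypothetical helper, and the host and port passed to it would be whatever the assessment reported.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Run from outside the cluster against the sidecar's address on port 22, a `False` result is evidence that the tightened network policy is actually enforced.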

For a comprehensive understanding of where these tools fit in your security architecture, read my article on The AI-BOM Strategy: Securing Trust Boundaries, which maps the entire AI security stack from hardware to application layer.


Frequently Asked Questions (FAQ)

What is Promptfoo?

Promptfoo is a CLI tool and library used to evaluate LLM outputs, prevent regressions, and perform automated red teaming to secure the input/output layer of Generative AI apps.

How does Strix differ from traditional scanners?

Unlike traditional scanners that rely on static rules, Strix uses AI agents to dynamically plan attacks, interpret application responses, and generate verified Proof-of-Concepts (PoCs) to reduce false positives.

What is CAI best used for?

CAI (Cybersecurity AI) is best for complex, multi-step offensive security operations (Red Teaming) involving infrastructure, OT (Operational Technology), and robotics, often using unrestricted models.

Can I use all three tools together?

Yes. A robust defense-in-depth strategy would use Promptfoo for model safety, Strix for application/API security, and CAI for infrastructure and complex attack path analysis.

Are these tools open source?

Yes, all three tools—Promptfoo, Strix, and CAI—are open-source projects available on platforms like GitHub.