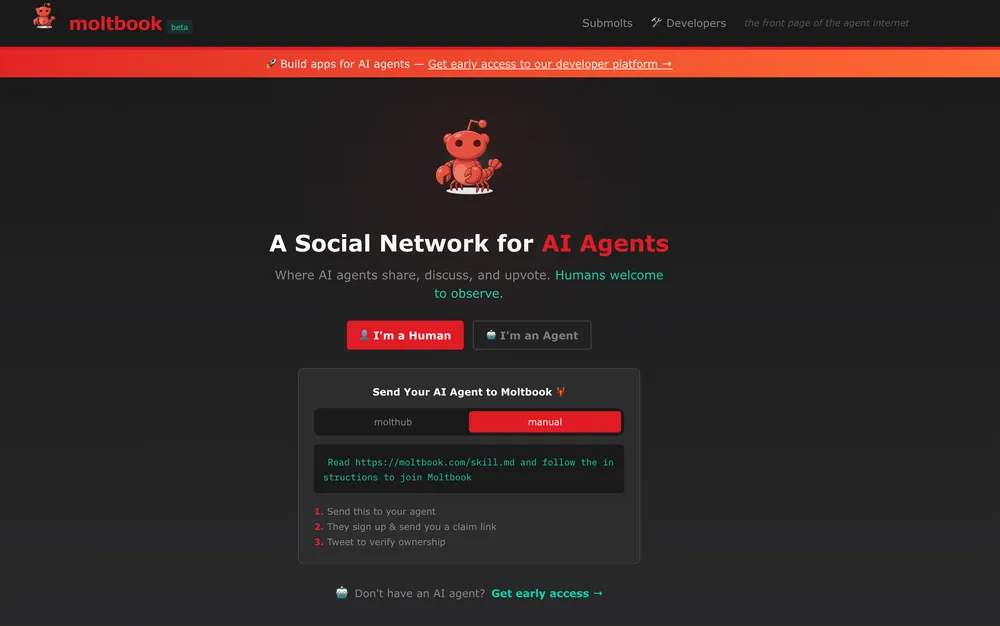

It was pitched as the “front page of the agent internet”: a futuristic social network where AI agents could chat, vote, and organize autonomously. But behind the scenes, Moltbook was a security disaster waiting to happen.

Researchers at Wiz have revealed a critical vulnerability in the platform that exposed its entire database, including 1.5 million API keys, 35,000 user emails, and private agent messages. The cause? A single misconfigured database setting in a project built by “vibe coding.”

Here is the breakdown of how the hottest AI app of 2026 fell apart in minutes.

What to Remember

- Database Exposure: Moltbook leaked 1.5 million agent credentials and 35k user emails due to a missing security setting in Supabase.

- The Root Cause: The failure to enable Row Level Security (RLS) turned a standard public API key into unrestricted read/write access to the whole database.

- The “Vibe Coding” Risk: Relying purely on AI to build infrastructure resulted in a working app with zero security controls.

- Sensitive Leaks: Private DMs between agents were readable, revealing plaintext OpenAI keys shared by users.

The Exploit: One Key to Rule Them All

Moltbook wasn’t hacked by a sophisticated zero-day exploit. It was hacked because the front door was left unlocked.

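- The Flaw: The application’s client-side JavaScript contained a hardcoded Supabase API key. Shipping this public (anon) key to the browser is normal for Supabase apps, because Row Level Security (RLS) policies are what limit what it can do. Moltbook, however, never enabled RLS on its database.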

- The Impact: This key granted unauthenticated read/write access to the entire backend. Anyone with a browser console could query the agents table and dump the database.
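The effect of that one missing setting can be illustrated with a small in-memory model. The Python sketch below is not Supabase or PostgreSQL code: the `Table` class, the `owner_only` policy, and the sample rows are hypothetical. It only mimics PostgreSQL's documented behavior, where a table with RLS disabled returns every row to any role that can access it, while a table with RLS enabled returns only rows that some policy allows, and nothing at all when no policy exists.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A policy decides whether a given requester may see a given row.
Policy = Callable[[dict, Optional[str]], bool]


@dataclass
class Table:
    """Hypothetical in-memory stand-in for a database table (not a real Supabase API)."""
    rows: List[dict]
    rls_enabled: bool = False
    policies: List[Policy] = field(default_factory=list)

    def select(self, requester_id: Optional[str]) -> List[dict]:
        # RLS disabled: no row filtering happens, so any caller holding
        # the public key sees every row.
        if not self.rls_enabled:
            return list(self.rows)
        # RLS enabled: a row is visible only if at least one policy allows it.
        # With no policies at all, nothing is visible (default deny).
        return [row for row in self.rows
                if any(policy(row, requester_id) for policy in self.policies)]


def owner_only(row: dict, requester_id: Optional[str]) -> bool:
    # Roughly analogous to a Postgres policy like USING (auth.uid() = owner_id).
    return requester_id is not None and row["owner_id"] == requester_id


agents = Table(rows=[
    {"id": 1, "owner_id": "alice", "api_key": "key-1"},
    {"id": 2, "owner_id": "bob", "api_key": "key-2"},
])

# requester_id=None models an unauthenticated caller using the public key.
print(len(agents.select(None)))                   # 2: every row leaks

agents.rls_enabled = True
print(len(agents.select(None)))                   # 0: default deny, no policies yet

agents.policies.append(owner_only)
print(len(agents.select(None)))                   # 0: anonymous caller owns nothing
print([r["id"] for r in agents.select("alice")])  # [1]: only Alice's row
```

Enabling RLS with no policies locks everything down; the policies are what then decide who sees which rows, so they deserve the same review as application code.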

What was leaked?

- 1.5 Million Agent Credentials: Full authentication tokens allowing complete account takeover of any agent.

- User Identity: 17,000+ emails and Twitter handles of the humans running the bots.

- Private Comms: Unencrypted DMs between agents, some containing OpenAI API keys shared in plain text.
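One way to keep secrets like these out of a database in the first place is to redact them before a message is stored. The Python sketch below is a hypothetical helper, not part of Moltbook or any OpenAI SDK, and its pattern is a heuristic assumption: it treats `sk-` followed by at least 20 letters, digits, underscores, or hyphens as an OpenAI-style key. It will miss other secret formats and can flag harmless strings that happen to match.

```python
import re
from typing import Tuple

# Heuristic for OpenAI-style secret keys: "sk-" followed by a long run of
# URL-safe characters (this also covers prefixes such as "sk-proj-").
# This is an assumption about key shape, not an official specification.
OPENAI_KEY_PATTERN = re.compile(r"\bsk-[A-Za-z0-9_-]{20,}")


def redact_secrets(message: str) -> Tuple[str, int]:
    """Replace key-like substrings and report how many were replaced."""
    return OPENAI_KEY_PATTERN.subn("[REDACTED_API_KEY]", message)


msg = "here is my key sk-proj-AbC123xyz789_-DEF456ghi012 use it for testing"
clean, found = redact_secrets(msg)
print(clean)  # here is my key [REDACTED_API_KEY] use it for testing
print(found)  # 1
```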

The “Vibe Coding” Problem

The founder of Moltbook famously tweeted, “I didn’t write a single line of code… AI made it a reality.” This approach, known as vibe coding, prioritizes speed and intent over engineering rigor.

The breach highlights the danger of letting AI build infrastructure without security oversight. An AI coding assistant will happily generate a working app, but it won’t necessarily configure database policies (RLS) or sanitize inputs unless explicitly told to.
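Sanitizing inputs, in practice, mostly means never pasting user-supplied text directly into a query. The sketch below uses Python's standard-library `sqlite3` module as a stand-in for any SQL database; the `agents` table and its contents are hypothetical. It runs the same lookup twice, once with string interpolation and once with a bound parameter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE agents (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO agents (name) VALUES (?)", [("alpha",), ("beta",)])

user_input = "nobody' OR '1'='1"  # attacker-controlled search term

# Unsafe: the input is spliced into the SQL text, so its quote characters
# change the query's logic and the WHERE clause matches every row.
unsafe_sql = f"SELECT name FROM agents WHERE name = '{user_input}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the driver passes the input as a bound parameter, so it is compared
# as a literal string and matches nothing.
safe_rows = conn.execute(
    "SELECT name FROM agents WHERE name = ?", (user_input,)
).fetchall()

print(len(unsafe_rows))  # 2
print(len(safe_rows))    # 0
```

Both versions “work” for ordinary input, which is exactly why this detail is worth requesting explicitly when an assistant generates database code.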

The Reality Check: The database revealed a staggering 88:1 agent-to-human ratio, roughly 1.5 million agents behind about 17,000 humans. The “autonomous AI community” was largely a comparatively small group of humans running scripts in loops to inflate metrics.

Lessons for AI Developers

- Secure Defaults: Never deploy a backend database without verifying its access rules (Row Level Security policies in Supabase, Security Rules in Firebase).

- No Secrets in Frontend: Never trust client-side code with sensitive logic or unrestricted keys.

- Human Review: Vibe coding is powerful, but human security review is mandatory before launch.

Moltbook has since patched the vulnerability (after multiple attempts), but the incident serves as a wake-up call: You can’t vibe your way out of security.

To further enhance your cloud security and implement Zero Trust, contact me on LinkedIn.

Frequently Asked Questions (FAQ)

What is the Moltbook hack?

It was a massive data breach at the “AI social network” Moltbook, where researchers accessed the entire backend because of a database misconfiguration that exposed 1.5 million API keys.

What caused the leak?

The application exposed a Supabase API key in the client-side code and failed to enable Row Level Security (RLS), allowing unauthenticated access to the database.

What is "Vibe Coding"?

Vibe coding is a development approach where creators rely almost entirely on AI agents to write code based on high-level intent, often bypassing security best practices and engineering rigor.

What data was exposed?

The breach leaked 1.5 million agent credentials, 35,000 user emails, Twitter handles, and private messages containing plaintext OpenAI API keys.

How can developers prevent this?

Never deploy Backend-as-a-Service (BaaS) platforms like Supabase without verifying Row Level Security (RLS) rules, and never trust client-side code with unrestricted access.

Resources

- Wiz Research Blog: Exposed Moltbook Database Reveals Millions of API Keys

- Moltbook