
The AI Engine's Exposed Wires: A Practitioner's Guide to MCP Security

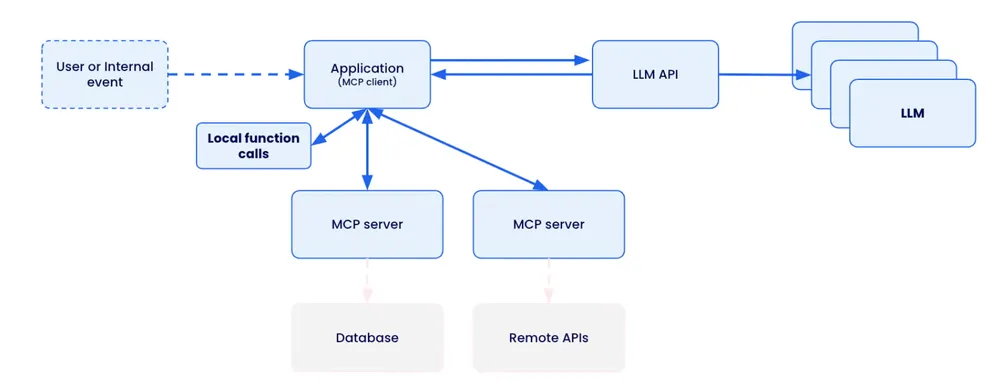

As developers and engineers, we are at the forefront of an incredible revolution. We are building the next generation of AI-powered applications, and the Model Context Protocol (MCP) has rapidly emerged as the essential framework for making it all work. It’s the powerful, standardized nervous system that allows our AI agents to connect to tools, access data, and perform complex tasks. It is the engine of the agentic era.

But in our rush to innovate, we have been building this powerful engine with exposed, live wires. We’ve been so focused on the incredible capabilities of the Large Language Model (LLM) that we’ve forgotten a fundamental truth: MCP is just software. And like all software, it is riddled with the same classic vulnerabilities we’ve been fighting for decades. Only now, the stakes are astronomically higher. This is particularly concerning as AI security threats continue to evolve in sophistication.

This isn’t a theoretical problem. Recent high-profile vulnerabilities, from command injections to full system takeovers, have proven that the MCP ecosystem is a new, fertile ground for attackers. Understanding these threats requires understanding broader cloud security risks and how they manifest in AI infrastructure. This is your technical, no-nonsense guide to understanding the six most critical MCP security threats, and how to start building a hardened, resilient defense.


The Core Problem: When “AI Magic” Meets Insecure APIs

At its heart, an MCP server is an API. It uses familiar transports like HTTP, carrying JSON-RPC messages, to allow an AI client (like an agent or a user-facing tool) to discover and call “tools”: functions that the AI can use to interact with the world.

The problem, as the Docker security team has highlighted, is that many developers have been so captivated by the “AI magic” that they’ve overlooked the “insecure API” reality. They assume that because the calls are being initiated by a trusted LLM, the inputs don’t need the same rigorous validation as a traditional web application.

This is a catastrophic mistake. An attacker doesn’t care if the request comes from an LLM or a simple curl command. If your API is vulnerable, they will find and exploit it.

The 6 Critical MCP Security Flaws You Need to Fix Now

Let’s break down the most common and dangerous vulnerabilities that are currently plaguing the MCP ecosystem.

1. Unauthenticated and Unauthorized Access

The Threat: This is the most fundamental flaw. Many developers, especially when testing locally, run their MCP servers without any authentication, often binding them to 0.0.0.0, which exposes the server to the entire local network or even the internet. This violates core Zero Trust principles that should govern all infrastructure security.

The Impact: As seen with CVE-2025-49596 in the Anthropic MCP Inspector, a lack of authentication allows an attacker to use techniques like DNS rebinding or Cross-Site Request Forgery (CSRF) to send unauthenticated commands from a malicious website directly to the MCP server running on a developer’s machine, leading to Remote Code Execution (RCE). It turns a developer’s browser into a weapon against them.
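One common mitigation for browser-borne attacks of this kind is to reject any request whose `Host` or `Origin` header is not on a local allow-list. The following is a minimal sketch of that check, not the fix shipped for any particular CVE. The port number and the names `ALLOWED_HOSTS`, `ALLOWED_ORIGINS`, and `is_request_allowed` are illustrative assumptions, and the check complements authentication rather than replacing it.

```python
from typing import Mapping

PORT = 8080  # hypothetical port for a locally running MCP server
ALLOWED_HOSTS = {f"localhost:{PORT}", f"127.0.0.1:{PORT}"}
ALLOWED_ORIGINS = {f"http://localhost:{PORT}", f"http://127.0.0.1:{PORT}"}


def is_request_allowed(headers: Mapping[str, str]) -> bool:
    """Return True only if Host (and Origin, when present) are allow-listed."""
    # Header names are case-insensitive, so normalise them first.
    normalised = {name.lower(): value.strip().lower() for name, value in headers.items()}

    # DNS rebinding: the browser still sends the attacker's hostname in Host.
    if normalised.get("host") not in ALLOWED_HOSTS:
        return False

    # CSRF: a cross-site browser request carries the attacker's Origin.
    origin = normalised.get("origin")
    if origin is not None and origin not in ALLOWED_ORIGINS:
        return False

    return True
```

A server would call `is_request_allowed` before routing any JSON-RPC message and answer with an HTTP 403 when it returns `False`.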

2. Tool Input Vulnerabilities (SSRF, Command Injection, SQLi)

The Threat: Developers expose powerful “tools” to the LLM without properly sanitizing the inputs. A common cognitive bias is to assume the LLM will only provide benign arguments.

The Impact: This is where classic web vulnerabilities resurface with a vengeance.

- A `fetch_url` tool without proper validation becomes a wide-open Server-Side Request Forgery (SSRF) vector.

- A `run_script` tool that concatenates user input into a shell command becomes a classic OS Command Injection vulnerability.

- A `query_database` tool that uses f-strings to build a query becomes a textbook SQL Injection flaw.

An attacker doesn’t need to craft a complex prompt to trick the LLM; they can often send a direct, malicious JSON-RPC request to the tool and achieve a full system compromise.
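To make the SQL injection case concrete, here is a small sketch using Python's built-in `sqlite3` module. The table, the data, and the function names `query_database_unsafe` and `query_database_safe` are invented for illustration. The same argument is passed to both versions, and only the f-string version lets it rewrite the query.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def query_database_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: the tool argument is spliced into the SQL text itself.
    sql = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(sql).fetchall()


def query_database_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver treats the argument strictly as data.
    sql = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(sql, (name,)).fetchall()


conn = build_demo_db()
payload = "nobody' OR '1'='1"  # the kind of argument a direct JSON-RPC call could carry

leaked = query_database_unsafe(conn, payload)   # the injected OR clause matches every row
blocked = query_database_safe(conn, payload)    # no user has this literal name
```

In the unsafe version the payload turns the `WHERE` clause into a condition that is always true, so every row comes back. The parameterized version looks for a user literally named `nobody' OR '1'='1` and finds none.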

3. Insecure Deserialization of Model Files

The Threat: Many AI models are saved and distributed using serialization formats like Python’s pickle. The pickle format is notoriously insecure because it can be crafted to execute arbitrary code when it is deserialized (loaded).

The Impact: If your MCP server allows for the uploading of new models, an attacker can upload a malicious model.pkl file. The moment your server loads this “model,” the attacker’s code executes on your machine. This is a direct, high-impact RCE vector that is often overlooked in the MLOps pipeline.
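The mechanism is easy to demonstrate with a deliberately harmless payload. In this sketch, the class name `MaliciousModel` is invented and `os.getcwd` stands in for whatever an attacker would really call (typically something like `os.system` with a shell command). What matters is that the callable runs during `pickle.loads`, before your code ever inspects the “model.”

```python
import os
import pickle


class MaliciousModel:
    """Stand-in for the object an attacker would hide inside model.pkl."""

    def __reduce__(self):
        # pickle records this callable and its arguments, then calls it on load.
        # os.getcwd is a harmless placeholder chosen for this demo.
        return (os.getcwd, ())


payload = pickle.dumps(MaliciousModel())

# Loading the bytes calls os.getcwd(); no MaliciousModel instance comes back.
loaded = pickle.loads(payload)
print(type(loaded).__name__)
```

The value returned by `pickle.loads` is the result of the attacker-chosen call, which shows that loading alone was enough to run it.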

4. The Supply Chain Nightmare: Malicious Dependencies

The Threat: The MCP ecosystem is a fast-moving open-source world. Developers are constantly pulling in new libraries and dependencies to add functionality. As we saw in the widespread NPM supply chain attacks, a single compromised maintainer account for a popular dependency can be used to inject malicious code into thousands of projects.

The Impact: An attacker can publish a malicious package that looks like a helpful MCP utility. When a developer installs it, a preinstall script can execute, stealing credentials, SSH keys, and cloud access tokens from the developer’s machine or the CI/CD pipeline. The Docker team has highlighted this as one of the most insidious “horror stories” of the modern supply chain.

5. Sensitive Information Exposure and Tool Poisoning

The Threat: Developers often expose sensitive information, such as API keys or internal URLs, through the tools or resources available on the MCP server. This can be unintentional, through verbose error messages, or intentional, as a “shortcut” for other tools to use.

The Impact: An attacker can use the server’s own discovery mechanisms (like the tools/list method) to find and exfiltrate these secrets. This can also lead to Tool Poisoning, where an attacker uses a stolen, low-privilege key to interact with a tool in a way that poisons its data or cache, causing it to return malicious results to legitimate users later on.
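For the verbose-error-message case, one simple pattern is to log the full exception on the server and return only a generic error to the caller. The sketch below assumes a hypothetical helper named `safe_error_response` and a logger name chosen for illustration. The code `-32603` is the standard JSON-RPC 2.0 “Internal error” code.

```python
import logging
import uuid

logger = logging.getLogger("mcp.tools")  # hypothetical logger name


def safe_error_response(exc: Exception) -> dict:
    """Log the details server-side and return a generic JSON-RPC error object."""
    incident_id = uuid.uuid4().hex
    # Full details (which may contain keys or internal hosts) stay in the server logs.
    logger.error("tool failure %s: %r", incident_id, exc)
    # The caller only receives an opaque identifier to quote in a support request.
    return {
        "code": -32603,
        "message": "Internal error",
        "data": {"incident_id": incident_id},
    }
```

The incident identifier lets an operator find the matching log entry without the response revealing anything about the failure itself.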

6. Insecure Management of the AI Lifecycle

The Threat: The security of an MCP server is not just about the server itself, but the entire AI lifecycle it manages. This includes the data used for training and fine-tuning, and the processes for updating and deploying models.

The Impact: If an attacker can find a way to inject malicious data into your training dataset (a Data Poisoning attack), they can create a subtle, persistent backdoor in your model. When the MCP server later deploys this poisoned model, the attacker can trigger the backdoor to achieve their objectives, and this type of compromise is incredibly difficult to detect.

The Practitioner’s Action Plan: A Defense-in-Depth Blueprint

Securing your MCP infrastructure requires a multi-layered approach that combines network security, API hygiene, and a Zero Trust mindset.

- 1. Isolate and Authenticate:

- Never bind to `0.0.0.0` unless you have a very specific, firewalled reason. Default to `localhost` for local development.

- Place your remote MCP servers in a private VPC. Do not expose them directly to the internet. Use a secure gateway like a Google Cloud Load Balancer with Identity-Aware Proxy (IAP) to enforce strong, identity-based authentication for every single connection. This aligns with modern cloud threat detection best practices.

- Always use HTTPS. Encrypt all traffic between your clients and the MCP server using TLS.
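As a rough illustration of the “authenticate every connection” advice, the sketch below checks a bearer token in constant time. It is a simplified stand-in for the identity-based gateway described above, not a replacement for it. The names `BIND_HOST`, `generate_token`, and `is_authorized` are hypothetical.

```python
import hmac
import secrets
from typing import Mapping

BIND_HOST = "127.0.0.1"  # loopback only; avoid 0.0.0.0 for local development


def generate_token() -> str:
    """Create a random per-session token to hand to the legitimate client."""
    return secrets.token_urlsafe(32)


def is_authorized(headers: Mapping[str, str], expected_token: str) -> bool:
    """Accept only requests carrying 'Authorization: Bearer <expected_token>'."""
    scheme, _, presented = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not presented:
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(presented.encode(), expected_token.encode())
```

A server would generate the token at startup, pass it to the client out of band, and reject any request for which `is_authorized` returns `False`.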

- 2. Sanitize Everything (Assume All Input is Malicious):

- Validate and Sanitize All Tool Inputs. Treat every argument passed to an MCP tool as if it came from a malicious user. Use allow-lists for characters, validate URLs against a strict pattern, and use parameterized queries for all database interactions.

- Use Secure Serialization Formats. Stop using `pickle`. Use safer formats like `safetensors` for distributing and loading your models.
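The input-validation advice above can be sketched for two of the tools discussed earlier. This is one possible approach under stated assumptions: the host allow-list, the script directory, and the function names `is_safe_fetch_url` and `build_script_argv` are all hypothetical. A production fetcher would also need to handle redirects, since a permitted host can redirect to an internal address.

```python
import ipaddress
import re
from urllib.parse import urlsplit

ALLOWED_FETCH_HOSTS = {"api.example.com", "docs.example.com"}  # hypothetical allow-list
SCRIPT_NAME = re.compile(r"[A-Za-z0-9_-]{1,64}")  # allow-list of characters


def is_safe_fetch_url(url: str) -> bool:
    """Allow only https URLs that point at an explicitly allow-listed hostname."""
    try:
        parts = urlsplit(url)
    except ValueError:
        return False
    if parts.scheme != "https":
        return False
    host = parts.hostname
    # Reject missing hosts and 'user@host' tricks that disguise the real target.
    if host is None or parts.username or parts.password:
        return False
    try:
        ipaddress.ip_address(host)
        return False  # raw IP literals (e.g. cloud metadata addresses) are never allowed
    except ValueError:
        pass  # not an IP literal, so fall through to the hostname check
    return host in ALLOWED_FETCH_HOSTS


def build_script_argv(script_name: str) -> list:
    """Build an argument list for subprocess.run(argv) with no shell involved."""
    if not SCRIPT_NAME.fullmatch(script_name):
        raise ValueError("invalid script name")
    # Hypothetical runner path; the validated name is passed as a separate argument.
    return ["/opt/mcp/scripts/run.sh", script_name]
```

Because the script name travels as its own list element and never through a shell, characters like `;` or `&&` have no special meaning even if the character check were loosened.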

- 3. Harden Your Supply Chain:

- Use a Private Registry. Do not pull packages directly from public repositories in your production builds. Use a private, vetted registry like JFrog Artifactory or Sonatype Nexus. This is part of broader software supply chain security strategies.

- Pin Your Dependencies. Use lock files (like `package-lock.json` or `poetry.lock`) to ensure you are always using specific, known-good versions of your dependencies.

- Scan Everything. Integrate automated SCA and SAST scanning into your CI/CD pipeline to detect known vulnerabilities and malicious code in your dependencies and your own code.
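Pinning works because lock files typically record a cryptographic hash alongside each version, and the package manager refuses an artifact whose hash does not match. The sketch below shows that idea in isolation using SHA-256. The function names `sha256_hex` and `verify_artifact` are hypothetical, and real package managers perform this check for you.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned_sha256: str) -> bool:
    """Accept the artifact only if its digest equals the pinned value."""
    return hmac.compare_digest(sha256_hex(data), pinned_sha256.lower())


# The digest recorded when the dependency was first vetted.
vetted_bytes = b"contents of the vetted package archive"
pinned = sha256_hex(vetted_bytes)

# Later, a download that was altered upstream no longer matches the pin.
tampered_bytes = vetted_bytes + b" plus injected code"
```

A pin like this detects a published artifact that changes after vetting. It does not help if the version you vetted was already malicious, which is why scanning remains a separate step.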

- 4. Embrace Least Privilege:

- Run your MCP server as a low-privilege user. It should not run as root.

- Use a dedicated, least-privilege service account for your MCP server in the cloud, with a tightly scoped set of IAM permissions. This implements the Principle of Least Privilege in practice.

- Grant your AI agents access only to the specific, granular tools they need for a given task. Avoid creating overly permissive, generic tools.
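Granting agents only the tools they need can be enforced at the dispatch layer with a per-agent allow-list. The following is a minimal sketch. The agent identifiers, tool names, and the `call_tool` dispatcher are invented for illustration and are not part of any MCP SDK.

```python
from typing import Any, Callable, Dict, FrozenSet

# Hypothetical tool registry: small, single-purpose tools instead of one generic one.
TOOLS: Dict[str, Callable[..., Any]] = {
    "read_ticket": lambda ticket_id: f"ticket {ticket_id}",
    "close_ticket": lambda ticket_id: f"closed {ticket_id}",
}

# Hypothetical grants: each agent sees only the tools its task requires.
AGENT_TOOL_GRANTS: Dict[str, FrozenSet[str]] = {
    "triage-agent": frozenset({"read_ticket"}),
    "support-agent": frozenset({"read_ticket", "close_ticket"}),
}


def call_tool(agent_id: str, tool_name: str, **arguments: Any) -> Any:
    """Dispatch a tool call only if this agent was explicitly granted the tool."""
    granted = AGENT_TOOL_GRANTS.get(agent_id, frozenset())  # unknown agents get nothing
    if tool_name not in granted or tool_name not in TOOLS:
        raise PermissionError(f"{agent_id!r} may not call {tool_name!r}")
    return TOOLS[tool_name](**arguments)
```

With these grants, `call_tool("triage-agent", "close_ticket", ticket_id=7)` raises `PermissionError`, while the same call from `support-agent` succeeds.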

- 5. The Future: A New Era of Trusted AI with Docker and E2B:

- The long-term solution lies in building a fundamentally more secure foundation for AI. Initiatives like Docker’s partnership with E2B (e2b.dev) are pioneering this new frontier. They are building a future where AI agents can be given access to secure, sandboxed cloud environments where they can execute code and perform tasks without ever posing a risk to the underlying infrastructure. This model of “sandboxed execution” is the future of trusted AI.

Conclusion: From Insecure Engine to Hardened Fortress

The Model Context Protocol is a powerful and necessary engine for the agentic era. But for too long, we have been building it on a foundation of implicit trust and forgotten security principles. The recent wave of vulnerabilities has been a harsh but necessary wake-up call. This aligns with broader concerns about AI security risks in the enterprise.

As the builders of this new world, it is our responsibility to secure it. By treating every MCP server as a critical, internet-facing API, by assuming all input is hostile, and by building a defense-in-depth architecture that combines network isolation, strong authentication, and a secure supply chain, we can transform this insecure engine into a hardened fortress. The future of AI depends on it.

To further enhance your cloud security and implement Zero Trust, contact me via my LinkedIn profile or by email.

Key Takeaways

- Never bind to 0.0.0.0 - Default to localhost for local development

- Validate all inputs - Treat every argument as potentially malicious

- Use safetensors, not pickle - Safer model serialization format

- Implement Zero Trust - Enforce authentication on every connection

- Private registries - Vet all dependencies before production use

Frequently Asked Questions (FAQ)

What is the Model Context Protocol (MCP)?

MCP is an open protocol that standardizes how AI agents and LLM applications connect to and interact with external tools and data sources. It acts as the API layer for the AI era.

What is the most common security flaw in MCP servers today?

The most common and dangerous flaw is a lack of proper authentication, often combined with insecure network exposure (binding to `0.0.0.0`). This allows attackers to bypass security controls and send malicious commands directly to the server. Learn more about [modern cloud threat detection](/post/modern-cloud-threat-detection-playbook) strategies.

My MCP server is only on my local machine. Am I still at risk?

Yes. As demonstrated by CVE-2025-49596, attackers can use browser-based attacks like DNS rebinding to send commands from a malicious website to a server running on `localhost`. Isolation is not guaranteed by running locally. Implementing [Zero Trust architecture](/post/your-practical-guide-to-building-a-zero-trust-architecture) principles helps mitigate this risk.

How can I protect my organization from malicious dependencies in the MCP ecosystem?

The most effective strategy is to use a **private package registry** like JFrog Artifactory or Sonatype Nexus and to **pin your dependencies** using a lock file. This ensures you are only using specific, vetted versions of your open-source components.

Relevant Resource List

- Docker Blog: “MCP Security Issues Threatening AI Infrastructure”

- Docker Blog: “MCP Horror Stories: The Supply Chain Attack”

- Docker Blog: “MCP Security Explained”

- Docker Blog: “What’s Next for MCP Security?”

- Docker Blog: “Docker E2B: Building the Future of Trusted AI”