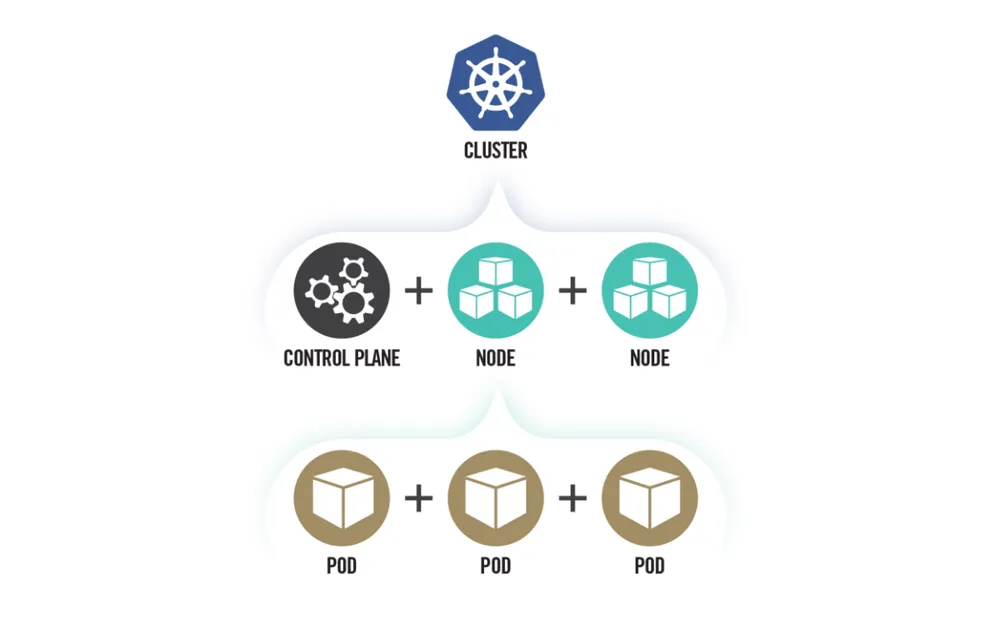

Kubernetes is the undisputed operating system of the cloud. It is the engine of modern innovation, a platform of incredible power, scalability, and agility. But beneath this powerful facade lies a dizzying level of complexity—a complexity that, if left unchecked, creates a sprawling and highly attractive attack surface for adversaries.

We often talk about “securing Kubernetes” in abstract terms, with a sea of best practices and vendor solutions. But what does a truly hardened cluster actually look like? Where should a CISO or security leader even begin?

Fortunately, we don’t have to guess. We can look to the authoritative Kubernetes Hardening Guide, jointly published by the U.S. National Security Agency (NSA) and CISA, as the definitive blueprint for locking down this critical infrastructure. This isn’t just another vendor whitepaper; it’s a battle-tested guide from the world’s foremost cybersecurity experts.

We have distilled this comprehensive, highly technical document into the top 10 most critical, high-impact actions your security and platform teams must take. This is your essential checklist for transforming your Kubernetes clusters from a potential liability into a hardened fortress.

Why This Guidance Matters: The Default State of Kubernetes is Too Permissive

The core challenge with Kubernetes is that its default configuration prioritizes functionality and ease-of-use over security. It is designed to “just work” out of the box. This means that, by default:

- Every workload can communicate with every other workload.

- Workloads can often run with more privileges than they need.

- The control plane might be exposed in ways you don’t expect.

Securing Kubernetes is the process of systematically removing this implicit trust and applying explicit, preventative controls.

The Top 10 Kubernetes Hardening Actions

This is your prioritized action plan. Each of these points represents a critical layer in a defense-in-depth strategy for your clusters.

1. Build Walls Between Your Workloads with Network Policies

The Risk: By default, every Pod in a Kubernetes cluster can communicate with every other Pod. It’s a flat, open network. If a single public-facing web server pod is compromised, it can immediately be used as a pivot point to attack critical internal services, like databases or authentication systems, within the same cluster.

Your Action Plan: You must implement NetworkPolicy resources to create firewall rules at the Pod level. Start with a default-deny policy for your critical namespaces, blocking all traffic, and then explicitly allow only the specific, necessary communication paths. For example, only “frontend” pods should be allowed to talk to “api-service” pods on port 8080. This single action drastically contains the blast radius of a compromise.

2. Lock Down Your Pods with Pod Security Standards

The Risk: A compromised container is bad. A compromised container that can escape to the underlying host node is a catastrophe. Many default container configurations allow for dangerous privilege escalation, such as running as the root user or mounting sensitive host directories.

Your Action Plan: You must enforce the official Pod Security Standards. These are predefined security policies (Privileged, Baseline, Restricted) applied at the namespace level. For all standard workloads, your goal should be to enforce the Restricted policy. This prevents containers from running as root, disables privilege escalation, and applies a host of other critical hardening measures by default.

3. Enforce Strict, Namespaced RBAC (Role-Based Access Control)

The Risk: The cluster-admin role is the “root” of your entire cluster. Granting this role too widely, especially to service accounts, is one of the most common and dangerous misconfigurations. A single compromised credential with cluster-admin privileges means an instant “game over.”

Your Action Plan: Rigorously adhere to the principle of least privilege. Avoid using the default cluster-admin role wherever possible. Instead, use namespaced Roles and RoleBindings to grant permissions only within the specific namespace where they are needed. A service account for an application in the production namespace should have zero permissions in the development namespace.

4. Scan and Secure Your Container Images

The Risk: Your running containers are only as secure as the images they were built from. Using base images from untrusted sources or failing to scan for vulnerabilities in your application dependencies is like building your fortress on a foundation of sand.

Your Action Plan: Implement a multi-step image hygiene process:

- Curate your sources. Use trusted and minimal base images from reputable registries, not the “latest” tag from a public source.

- Integrate a scanner into your CI/CD pipeline and your container registry (like Google’s Artifact Registry).

- Scan regularly. Continuously scan for vulnerabilities in both OS packages and application libraries, and establish a process to quickly rebuild and redeploy patched images.

5. Harden the Control Plane: The Brain of the Operation

The Risk: The Kubernetes control plane—especially the API Server—is the brain of your cluster. If an attacker can communicate with it, they can attempt to control the entire environment. Unnecessary exposure or insecure defaults are critical errors.

Your Action Plan:

- Disable anonymous authentication for the API Server by setting the

--anonymous-auth=falseflag. - Restrict network access to your control plane nodes. Use firewall rules to ensure only authorized administrative networks can communicate with the API Server.

- Encrypt your

etcddatabase at rest. This database stores the entire state of your cluster, including all configurations and secrets, and must be protected.

6. Treat Secrets Like Secrets: Use a Dedicated Secrets Manager

The Risk: Kubernetes Secrets, by default, are simply Base64-encoded strings stored in etcd. They are not truly encrypted in a way that protects them from anyone who gains access to the etcd database. Storing secrets in plaintext in environment variables is even worse.

Your Action Plan:

- Enable Encryption at Rest: At a minimum, enable encryption for secrets in

etcdusing a KMS provider. - Use an External Secrets Manager. For a higher level of security, integrate your cluster with a dedicated secrets management solution (like HashiCorp Vault or a cloud provider’s native service like GCP Secret Manager). Use a secrets store driver to mount secrets into Pods as in-memory volumes, which prevents them from being easily exposed via environment variables.

7. Isolate Your Worker Nodes

The Risk: Worker nodes are the underlying servers where your containers run. If an attacker achieves a container escape, their next target is the node itself. If all your workloads—from sensitive production databases to experimental dev applications—run on the same nodes, a single compromise can put everything at risk.

Your Action Plan: You must use node pools, taints, and tolerations to segment your workloads onto different sets of worker nodes. Your critical, internet-facing applications should run on a separate, hardened set of nodes from your internal backend services, creating another crucial layer of isolation.

8. Enable Comprehensive Audit Logging

The Risk: Without a detailed audit trail, a security incident in Kubernetes is a black box. If you don’t know who did what, when, and where, you have no hope of conducting a successful forensic investigation or preventing the same attack from happening again.

Your Action Plan: You must enable Kubernetes audit logging and ensure the logs are shipped to a secure, centralized logging platform for monitoring and analysis. Configure a detailed audit policy that logs all relevant events, especially all requests to the API Server that create, modify, or delete resources.

9. Prevent Self-Denial-of-Service with Resource Quotas

The Risk: A misbehaving or compromised application that consumes excessive CPU or memory can starve other critical workloads on the same node or even across the entire cluster, leading to a self-inflicted denial-of-service.

Your Action Plan: You must use ResourceQuotas and LimitRanges at the namespace level. This allows you to set hard limits on the total amount of CPU and memory that can be consumed by all Pods in a namespace. Furthermore, you must enforce the setting of requests and limits for each individual container to ensure predictable performance and prevent resource contention.

10. Stay Current, Stay Secure: Regularly Upgrade Kubernetes

The Risk: Kubernetes is a fast-moving open-source project. New versions are released approximately every four months, and each release includes critical security patches. Running an old, unsupported version of Kubernetes means you are exposed to a growing list of publicly known vulnerabilities.

Your Action Plan: You must maintain a regular upgrade cadence. Your organization needs a formal plan and process for regularly upgrading your clusters to a supported version of Kubernetes to ensure you are receiving timely security fixes.

Conclusion: From Complexity to Control

Securing Kubernetes can seem like a daunting task, but it doesn’t have to be an insurmountable one. By focusing on this authoritative guidance, you can move beyond the chaos and build a clear, prioritized roadmap.

This top 10 list is your starting point. It represents a defense-in-depth strategy that hardens your infrastructure at every layer—from the underlying nodes to the network, the control plane, and the workloads themselves. This isn’t just about compliance; it’s about building a resilient, secure, and trustworthy platform that can power your innovation without introducing unnecessary risk.

To further enhance your cloud security and implement Zero Trust, contact me on LinkedIn Profile or [email protected].

Frequently Asked Questions (FAQ)

Where should my team start? This list is a lot.

The most impactful starting points are typically **1) Enforcing Strict RBAC** to limit the potential for privilege escalation, **2) Implementing Network Policies** to contain the blast radius of a breach, and **3) Enforcing Pod Security Standards** to prevent container escapes.

Isn't my managed Kubernetes service (like GKE, EKS, or AKS) secure by default?

Managed services handle the security *of the control plane* for you, which is a huge benefit. However, *you* are still responsible for the security *in the cluster*. This includes everything on this list, such as securing your workloads, configuring RBAC, implementing network policies, and managing your container images. This is the shared responsibility model in action.

How is securing Kubernetes different from securing traditional VMs?

The biggest differences are the **orchestration layer** and the **ephemerality**. You have to secure the Kubernetes API Server and control plane itself, which doesn't exist in a VM world. Additionally, because containers are ephemeral and share a kernel, you need new methods for network segmentation `NetworkPolicy` and runtime security that are not dependent on static IP addresses or host-based agents.

What is the single biggest mistake organizations make with Kubernetes security?

The single biggest and most common mistake is **relying on the default, overly permissive settings**, especially with RBAC. Granting `cluster-admin` to service accounts or developers for "convenience" is the easiest way to create a critical security vulnerability.

Can I automate the enforcement of these hardening guidelines?

Yes, and you absolutely should. Many of these controls can and should be enforced using "policy-as-code" tools like OPA (Open Policy Agent) Gatekeeper or Kyverno. These tools can be integrated into your CI/CD pipeline to automatically block deployments that violate your security policies (e.g., a Pod trying to run as root).