You paste a database connection string into your AI coding assistant. It helps debug the error. Later, you realize that string contained your production password and it now lives in a third-party log somewhere.

This happens constantly: API keys in error messages, credentials in config files, and internal IPs in stack traces all flow to LLM providers. A 2024 GitGuardian study found more than 10 million secrets leaked to public repos annually. The number shared with AI tools is impossible to measure, but it is almost certainly large.

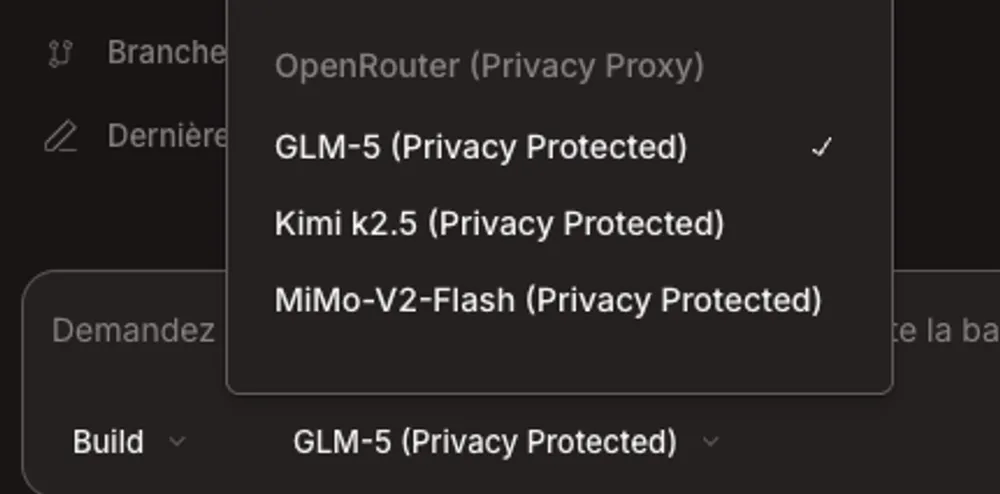

I built a solution: a local privacy proxy that sanitizes data before it leaves your machine. You get AI assistance without trusting anyone with your secrets.

To request preview access to the codebase, contact me on LinkedIn or email [email protected]. The repository is private for now, but I may make it public if there is enough interest. It is currently configured only for OpenCode and OpenRouter, but the architecture can be adapted to any LLM provider.

Why This Matters

Most developers assume their prompts are ephemeral. They’re not:

- Training data: Some providers use prompts for model improvement

- Audit logs: Enterprise services log requests for debugging/billing

- Third-party chains: Proxies and middleware add trust dependencies

- Compliance: Sharing prod credentials may violate SOC 2, GDPR, or internal policies

The fix isn’t to stop using AI; it’s to sanitize data at the source.

How It Works

┌─────────────┐     ┌────────────────────────┐     ┌──────────────┐
│  OpenCode   │────▶│  Local Privacy Proxy   │────▶│  OpenRouter  │
│   (IDE)     │     │ http://localhost:8000  │     │  (LLM API)   │
└─────────────┘     └────────────────────────┘     └──────────────┘
                                │
                                ▼
                       ┌─────────────────┐
                       │  30+ Patterns   │
                       │  • API keys     │
                       │  • Emails       │
                       │  • DB strings   │
                       │  • IPs/Paths    │
                       └─────────────────┘

Key principle: Redaction happens locally. Zero trust in intermediate services.
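The flow in the diagram can be sketched as a single function that cleans an OpenAI-style chat payload before it is forwarded. This is a minimal illustration, not the proxy's actual code: `RULES`, `redact`, and `sanitize_chat_payload` are hypothetical names, and only two of the 30+ patterns are included.

```python
import copy
import re

# Hypothetical stand-in for the proxy's pattern table (two illustrative rules).
RULES = [
    (re.compile(r"sk-[A-Za-z0-9]{20,}"), "[OPENAI_API_KEY_REDACTED]"),
    (re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"), "[EMAIL_REDACTED]"),
]


def redact(text: str) -> str:
    """Apply every rule in order and return the sanitized text."""
    for pattern, tag in RULES:
        text = pattern.sub(tag, text)
    return text


def sanitize_chat_payload(payload: dict) -> dict:
    """Return a redacted copy of an OpenAI-style chat payload.

    The original payload is left untouched; only string message
    contents are rewritten.
    """
    clean = copy.deepcopy(payload)
    for message in clean.get("messages", []):
        if isinstance(message.get("content"), str):
            message["content"] = redact(message["content"])
    return clean


payload = {
    "model": "zhipu/glm-5",
    "messages": [
        {
            "role": "user",
            "content": "Why does key sk-" + "a1B2" * 6 + " fail for ops@example.com?",
        }
    ],
}
outgoing = sanitize_chat_payload(payload)
print(outgoing["messages"][0]["content"])
```

Only `outgoing` would be sent upstream; the untouched `payload` stays in local memory.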

The 30-Second Setup

# 1. Install dependencies
pip install -r requirements.txt

# 2. Configure
cp config/.env.example config/.env
# Edit config/.env → add your OPENROUTER_API_KEY

# 3. Start proxy
python src/proxy.py

Configure OpenCode (opencode.json):

{
  "provider": {
    "openrouter-privacy": {
      "npm": "@ai-sdk/openai-compatible",
      "options": { "baseURL": "http://localhost:8000/v1" },
      "models": {
        "zhipu/glm-5": { "name": "GLM-5 (Privacy Protected)" },
        "moonshotai/kimi-k2.5": { "name": "Kimi k2.5 (Privacy Protected)" }
      }
    }
  }
}

Use it:

opencode run "Review this code" --model zhipu/glm-5

Redaction in Action

Before (What You Paste)

import os

# Database connection
DB_URL = "postgresql://admin:S3cr3tP@[email protected]:5432/app"

# API keys
OPENAI_API_KEY = "sk-abc123xyz789"
STRIPE_KEY = "sk_live_51ABC123DEF456"

# Contact info
ADMIN_EMAIL = "[email protected]"
ADMIN_PHONE = "+1-555-0123"

# Infrastructure
INTERNAL_API = "https://10.0.1.50:8080"
PROJECT_ROOT = "/home/john/projects/myapp"

After (What the LLM Sees)

import os

# Database connection
DB_URL = "postgresql://[USER_REDACTED]:[PASSWORD_REDACTED]@[HOST_REDACTED]:[PORT_REDACTED]/[DB_NAME_REDACTED]"

# API keys
OPENAI_API_KEY = "[OPENAI_API_KEY_REDACTED]"
STRIPE_KEY = "[STRIPE_KEY_REDACTED]"

# Contact info
ADMIN_EMAIL = "[EMAIL_REDACTED]"
ADMIN_PHONE = "[PHONE_REDACTED]"

# Infrastructure
INTERNAL_API = "[IPV4_REDACTED]"
PROJECT_ROOT = "[HOME_PATH_REDACTED]"

The LLM still provides value: it detects that you’re hardcoding credentials, suggests environment variables, recommends secrets management (Vault, AWS Secrets Manager), and spots that you’re exposing internal infrastructure. All without seeing the actual secrets.

The Pattern Engine

The proxy uses regex-based detection, not semantic analysis. This is fast and deterministic, and it covers 95% of leak scenarios:

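# src/anonymizer.py - Example patterns
# Each entry maps a rule name to (regex, replacement). The replacement is
# either a tag string or a callable that receives the match object.
PATTERNS = {
    "api_key_openai": (
        r'sk-[a-zA-Z0-9]{20,}',
        "[OPENAI_API_KEY_REDACTED]"
    ),
    "email": (
        # The TLD is letters only, two or more
        r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b',
        "[EMAIL_REDACTED]"
    ),
    "db_connection": (
        # The database name stops at whitespace or a quote, so the closing
        # quote of a string literal survives the replacement
        r'(postgresql|mysql|mongodb)://([^:]+):([^@]+)@([^:]+):(\d+)/([^\s"\']+)',
        lambda m: f"{m.group(1)}://[USER_REDACTED]:[PASSWORD_REDACTED]@[HOST_REDACTED]:[PORT_REDACTED]/[DB_NAME_REDACTED]"
    ),
    "ip_address": (
        r'\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b',
        "[IPV4_REDACTED]"
    ),
    # ... 26 more patterns
}

Why regex over ML?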

- Speed: < 50ms overhead per request

- Deterministic: Same input = same output, always

- Predictable: No “smart” false negatives

- Auditable: You can see exactly what gets caught
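A pattern table of this shape can be applied with `re.subn`, which accepts either a string or a callable as the replacement and returns the number of substitutions. The sketch below is an assumption about how such an engine might look, not the contents of `src/anonymizer.py`; `COMPILED` and `anonymize` are hypothetical names, and the table holds only two rules.

```python
import re

# Illustrative subset of a pattern table: name -> (regex, replacement).
PATTERNS = {
    "db_connection": (
        r'(postgresql|mysql|mongodb)://([^:]+):([^@]+)@([^:]+):(\d+)/([^\s"\']+)',
        lambda m: (
            f"{m.group(1)}://[USER_REDACTED]:[PASSWORD_REDACTED]"
            "@[HOST_REDACTED]:[PORT_REDACTED]/[DB_NAME_REDACTED]"
        ),
    ),
    "ip_address": (r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b", "[IPV4_REDACTED]"),
}

# Compile once at import time; dict order is preserved, so the more
# specific rule (db_connection) runs before the generic one (ip_address).
COMPILED = [(name, re.compile(rx), repl) for name, (rx, repl) in PATTERNS.items()]


def anonymize(text):
    """Return (sanitized_text, hit_counts) for the given input."""
    counts = {}
    for name, regex, replacement in COMPILED:
        text, hits = regex.subn(replacement, text)
        if hits:
            counts[name] = hits
    return text, counts


sample = 'DB_URL = "postgresql://admin:hunter2@10.0.1.7:5432/app"\nHOST = "10.0.1.50"'
clean, counts = anonymize(sample)
print(clean)
print(counts)
```

Rule order matters here: if `ip_address` ran first, it would rewrite the host inside the connection string, although `db_connection` would still match and redact the rest afterwards. The per-rule hit counts are the kind of data a stats endpoint or audit log could report.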

Privacy Layers

┌─────────────────────────────────────────────────────────────────────────────┐
│                       Privacy-Preserving Architecture                       │
├─────────────────────────────────────────────────────────────────────────────┤
│                                                                             │
│  ┌──────────────┐    ┌──────────────┐    ┌──────────────┐    ┌──────────┐   │
│  │    LOCAL     │───▶│   NETWORK    │───▶│  OPENROUTER  │───▶│   LLM    │   │
│  │              │    │              │    │              │    │          │   │
│  │  Redaction   │    │  Anonymized  │    │  Zero Data   │    │   ZDR    │   │
│  │  before any  │    │  data only   │    │  Retention   │    │ compliant│   │
│  │  network req │    │  transmitted │    │  (ZDR) mode  │    │ contracts│   │
│  └──────────────┘    └──────────────┘    └──────────────┘    └──────────┘   │
│                                                                             │
└─────────────────────────────────────────────────────────────────────────────┘

| Layer | Protection |
|---|---|
| Local | Redaction before any network request |
| Network | Only anonymized data transmitted |
| OpenRouter | Zero Data Retention (ZDR) mode |
| LLM Provider | ZDR-compliant contracts (Zhipu, Moonshot) |

Enable ZDR at openrouter.ai/settings:

- Toggle “Zero Data Retention” ON

- Disable “Input/Output Logging”

Verification

# Test redaction
python tests/test_redaction.py

# Check proxy health
curl http://localhost:8000/health

# View stats
curl http://localhost:8000/stats

# Monitor logs
tail -f logs/proxy.log | grep "Redacted"

Architecture Decisions

1. Local-Only Operation

An earlier design considered cloud-based pattern updates, but that would defeat the purpose. Updates happen via git pull, not API calls.

2. OpenAI-Compatible API

The proxy exposes /v1/chat/completions. It works with any tool that supports custom endpoints; no SDK changes are needed.
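Because the endpoint follows the OpenAI chat completions shape, a plain HTTP client is enough to talk to it. The sketch below uses only the standard library; `build_chat_request` and `ask` are hypothetical helpers, and it assumes the proxy attaches the OpenRouter key from config/.env itself. If your setup expects the client to send a key, add an `Authorization` header.

```python
import json
import urllib.request

# Base URL taken from the OpenCode configuration shown earlier.
PROXY_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt: str, model: str = "zhipu/glm-5") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for the local proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt through the proxy and return the first reply's text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as response:
        return json.load(response)["choices"][0]["message"]["content"]


# With the proxy running:
# print(ask("Review this code"))
```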

3. Preserve Context

Replace secrets with descriptive tags ([STRIPE_KEY_REDACTED], not [REDACTED]) so the LLM can still understand the code structure.

4. Debug Logging

Without visibility, you can’t trust the system. Debug mode shows a full before/after comparison:

DEBUG_MODE=true python src/proxy.py

Performance

| Metric | Value |
|---|---|
| Latency overhead | ~10-50ms per request |
| Context window | GLM-5 (128K), Kimi (200K) |
| CPU at idle | 0% |
| CPU during request | < 5% |
| Memory | ~50MB |
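To check the redaction cost on your own prompts, you can time the regex pass in isolation. This is a rough sketch with two illustrative rules and hypothetical names (`RULES`, `redact`, `measure_overhead_ms`); it measures only pattern matching, not JSON parsing or network time, so expect the end-to-end overhead to be higher.

```python
import re
import time

# Two illustrative rules; the real table is larger, so real timings will differ.
RULES = [
    (re.compile(r"sk-[A-Za-z0-9]{20,}"), "[OPENAI_API_KEY_REDACTED]"),
    (re.compile(r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b"), "[IPV4_REDACTED]"),
]


def redact(text: str) -> str:
    """Apply every rule in order and return the sanitized text."""
    for pattern, tag in RULES:
        text = pattern.sub(tag, text)
    return text


def measure_overhead_ms(text: str, runs: int = 20) -> float:
    """Average wall-clock time of one redaction pass, in milliseconds."""
    start = time.perf_counter()
    for _ in range(runs):
        redact(text)
    return (time.perf_counter() - start) * 1000 / runs


# Synthetic prompt of roughly 100 KB with a secret on every other line.
prompt = ('API_KEY = "sk-' + "x" * 24 + '"\nHOST = "10.0.1.50"\n') * 2000
print(f"{len(prompt)} chars: {measure_overhead_ms(prompt):.2f} ms per pass")
```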

Conclusion

The proxy gives you freedom: use any LLM (including high-quality Chinese models) without worrying about data leakage. Secrets and sensitive data matched by the patterns never leave your machine. The same approach can be adapted to other LLM providers and integrated into CI/CD pipelines, code review tools, and more.

To further enhance your cloud security and implement Zero Trust, contact me on LinkedIn or at [email protected].

Frequently Asked Questions (FAQ)

Does redaction confuse the AI?

No. LLMs analyze structure and patterns, not specific values. A redacted Stripe key is still recognizable as a payment API key.

Can I customize patterns?

Yes. You can edit `src/anonymizer.py` to add custom regex patterns and redaction tags.
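As an illustration, a custom rule is one more entry in the table. The sketch below assumes the `(regex, replacement)` tuple layout shown earlier; the `internal_host` rule, the `corp.example` domain, and the `anonymize` helper are hypothetical.

```python
import re

# Stand-in for the existing table, reduced to one built-in rule.
PATTERNS = {
    "ip_address": (r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b", "[IPV4_REDACTED]"),
}

# Hypothetical custom rule: any hostname under the internal corp.example domain.
PATTERNS["internal_host"] = (
    r"\b[a-z0-9-]+(?:\.[a-z0-9-]+)*\.corp\.example\b",
    "[INTERNAL_HOST_REDACTED]",
)


def anonymize(text: str) -> str:
    """Apply every rule in insertion order."""
    for regex, replacement in PATTERNS.values():
        text = re.sub(regex, replacement, text)
    return text


print(anonymize("curl https://billing-api.corp.example/v2 from 10.0.1.50"))
# → curl https://[INTERNAL_HOST_REDACTED]/v2 from [IPV4_REDACTED]
```

A descriptive tag such as `[INTERNAL_HOST_REDACTED]` keeps the same benefit as the built-in rules: the LLM can still tell what kind of value was removed.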

Does this work with corporate proxies?

Yes. You can set `HTTP_PROXY` and `HTTPS_PROXY` environment variables in your configuration file.

Why Chinese LLMs specifically?

Models like GLM-5 and Kimi k2.5 match GPT-4 quality at a lower cost. Their data handling policies differ, however, so this proxy lets you use them while maintaining data sovereignty.

Resources

- Quick Start: docs/QUICKSTART.md

- Architecture: docs/ARCHITECTURE.md

- OpenCode Setup: docs/OPENCODE_SETUP.md

- Troubleshooting: docs/TROUBLESHOOTING.md

- OpenRouter: openrouter.ai