Text-to-Malware: Fake AI Platforms as Cybercriminal Playgrounds

The explosion of Artificial Intelligence into the mainstream has been nothing short of revolutionary. Every day, millions are captivated by AI’s ability to generate art, compose music, and transform simple photos into dynamic videos. This excitement is palpable, but lurking in the shadows of this digital gold rush, cybercriminals have found a potent new lure: fake AI platforms. These deceptive sites, promising cutting-edge content generation, are increasingly becoming delivery mechanisms for sophisticated AI-themed malware, turning user enthusiasm into a dangerous infection vector.

Recent in-depth investigations by threat researchers at Morphisec and Mandiant (now part of Google Cloud) have pulled back the curtain on these alarming campaigns. What users expect to be a gateway to creative AI tools often turns into a trap, delivering potent malware designed to steal credentials, empty cryptocurrency wallets, and grant attackers deep control over infected systems. This isn’t just a theoretical threat; it’s happening now, at scale, and it’s crucial to understand how these schemes operate to protect yourself.

The Siren Song: How Users Are Lured by Fake AI Promises

The genius of these campaigns lies in their exploitation of current trends and the inherent trust users place in technology that seems cutting-edge and beneficial. Attackers are no longer solely relying on traditional phishing emails or cracked software sites. Instead, they’re building convincing, AI-themed platforms that often look and feel like legitimate services.

- The Bait: These platforms promise incredible AI capabilities, often focusing on video and image generation – tasks that are currently very popular and resource-intensive, making the offer of a “free” tool highly attractive.

- The Amplifier - Social Media: The primary distribution channels are legitimate social media platforms. Attackers create or compromise Facebook pages and LinkedIn accounts, running ad campaigns that can reach millions. Morphisec’s research highlighted Facebook groups boasting over 62,000 views on a single post, while Mandiant identified thousands of ads linked to these fake sites, collectively reaching over 2.3 million users in EU countries alone through Facebook.

- The Target Audience: This strategy reaches a newer, often more trusting audience than traditional malware campaigns, which typically lure gamers with pirated software. The new targets are creators, small businesses, and everyday users eager to explore AI for productivity or entertainment. They may be less practiced at verifying the authenticity of such platforms.

Once a user clicks on a malicious ad or a link in a social media group, they are redirected to a fraudulent website. These sites are designed to mimic the interface of genuine AI tools. Users are prompted to upload their images or videos, or enter text prompts, under the impression that they are interacting with a real AI for content generation or editing.

The Deceptive Download: From “Generated Content” to Malicious Payload

After the user uploads their content or provides a prompt and hits “generate,” a loading bar often appears, mimicking an AI model at work. After a few moments, a download link for the supposedly “processed” or “generated” content is presented. This is the critical point where the switch occurs.

Instead of the promised AI-generated video or image, the victim downloads a malicious payload, typically a ZIP archive. The filenames are craftily designed to deceive:

- Masquerading Extensions: The executable file within the archive often has a misleading name like VideoDreamAI.mp4.exe or Lumalabs_1926326251082123689-626.mp4<many spaces>.exe. The attackers exploit how Windows may display filenames, sometimes hiding the final .exe extension or pushing it out of view with numerous special whitespace characters (like the Braille Pattern Blank, Unicode U+2800). This makes the file appear as a harmless video or a generic file.

- Fake Icons: The malicious executable might even use the default .mp4 video file icon to further enhance the disguise.
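The filename tricks described above can be checked for mechanically. The following is a minimal Python sketch, not a vetted detector: the function names, the extension lists, and the set of blank-looking characters are illustrative assumptions, and only U+2800 comes from the research described here.

```python
import unicodedata

# Extensions Windows runs directly (partial, illustrative list).
EXECUTABLE_EXTS = {".exe", ".scr", ".bat", ".cmd", ".msi", ".com", ".pif"}
# Extensions a victim might expect from a content-generation site (illustrative).
DECOY_EXTS = {".mp4", ".mov", ".avi", ".png", ".jpg", ".jpeg", ".gif", ".pdf", ".docx"}
# Characters that render as blank but are not whitespace for str.isspace().
# U+2800 (Braille Pattern Blank) is the one named in the article; the rest are assumptions.
INVISIBLE_CHARS = {"\u2800", "\u3164"}


def is_blank_like(ch: str) -> bool:
    """True for whitespace, zero-width format characters, and blank-looking symbols."""
    return ch.isspace() or ch in INVISIBLE_CHARS or unicodedata.category(ch) == "Cf"


def suspicious_filename_reasons(name: str) -> list[str]:
    """Return the reasons a filename looks like a disguised executable (empty if none)."""
    reasons = []
    stem, dot, last = name.rpartition(".")
    final_ext = (dot + last).lower() if dot else ""
    if final_ext not in EXECUTABLE_EXTS:
        return reasons  # not directly executable, so nothing to flag in this sketch

    # Remove blank-like padding that sits just before the real extension.
    trimmed = stem
    while trimmed and is_blank_like(trimmed[-1]):
        trimmed = trimmed[:-1]
    padding = len(stem) - len(trimmed)
    if padding > 0:
        reasons.append(f"{padding} blank-like character(s) before {final_ext}")

    # Look for a harmless-looking extension hiding in front of the real one.
    _, inner_dot, inner_last = trimmed.rpartition(".")
    inner_ext = (inner_dot + inner_last).lower() if inner_dot else ""
    if inner_ext in DECOY_EXTS:
        reasons.append(f"double extension {inner_ext}{final_ext}")
    return reasons
```

Calling `suspicious_filename_reasons("VideoDreamAI.mp4.exe")` reports the double extension, while a plain `holiday.mp4` or `setup.exe` returns an empty list. An empty list does not mean a file is safe. It only means these two naming tricks were not found.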

Upon execution, this initial file doesn’t deliver the AI creation; it kicks off a complex, multi-stage malware installation process.

Inside the Matrix: A Simplified Look at the Attack Chain

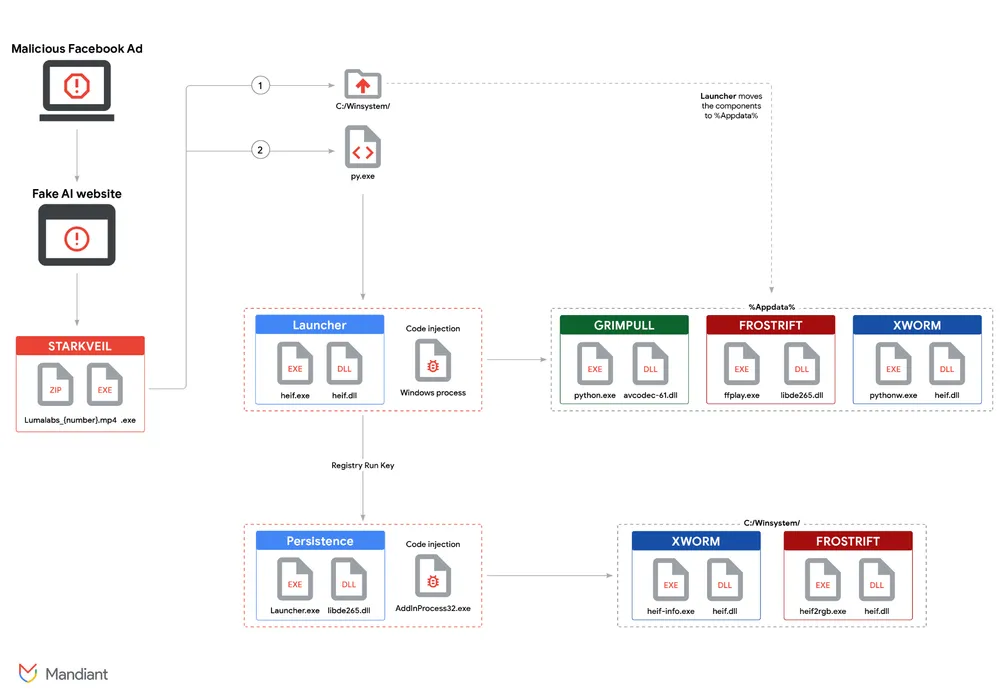

The malware delivered by these fake AI platforms is not a single, simple program. It’s a sophisticated, layered attack designed to evade detection and achieve multiple malicious objectives. Both Morphisec’s analysis of the Noodlophile Stealer and Mandiant’s investigation into the UNC6032 campaign (deploying STARKVEIL, XWORM, FROSTRIFT, and GRIMPULL malware) reveal intricate infection chains.

Here’s a simplified breakdown of what typically happens after that first deceptive click:

- The Initial Dropper: The downloaded .exe (e.g., VideoDreamAI.mp4.exe or the Rust-based STARKVEIL) is the first stage. A “dropper” is a type of malware designed to install other malicious programs.

- Often, this initial binary is a repurposed version of a legitimate application (Morphisec notes the Noodlophile campaign used a modified version of CapCut, a video editing tool). This helps it appear less suspicious to some security solutions.

- Mandiant observed that STARKVEIL sometimes needs to be run twice. The first run extracts embedded files into a hidden directory (C:\winsystem\); the second execution then proceeds with the infection.

- Evasion is Key: Attackers use numerous techniques to hide their malware:

- Obfuscation: Malicious scripts (like batch files disguised as Word documents, as seen with Document.docx in the Noodlophile campaign) are often encoded or structured in confusing ways to prevent easy analysis by humans and security software. Python scripts might be bulked up with thousands of meaningless operations to break automated analysis tools.

- Multi-Stage Loading: The full malware payload isn’t loaded at once. Components are often downloaded from remote servers or extracted from embedded archives only when needed.

- In-Memory Execution: Malicious code is sometimes loaded directly into the computer’s memory without writing it to the hard drive, making it harder for traditional antivirus to detect.

- DLL Side-Loading: Legitimate applications are tricked into loading malicious Dynamic Link Libraries (DLLs) that appear to be part of the benign software. The STARKVEIL campaign makes extensive use of this.

- Process Hollowing/Injection: Malicious code is injected into legitimate running processes to hide its activity.

- Anti-VM/Sandbox Checks: Some malware components, like GRIMPULL (identified by Mandiant), check if they are running in a virtual machine (VM) or sandbox environment (used by researchers for analysis). If detected, the malware may terminate itself to avoid scrutiny.

- The Core Malware Payloads – What They Do:

- Infostealers (e.g., Noodlophile, Python-based stealers in the UNC6032 campaign): This is a primary goal. These components are designed to steal:

- Browser Credentials: Saved usernames and passwords from web browsers.

- Cookies: Allowing attackers to potentially hijack active login sessions.

- Cryptocurrency Wallets: Targeting software wallets and browser extensions used for managing crypto.

- Sensitive Data: Other personal or financial information stored on the device.

- Facebook Information: Mandiant notes exfiltration of Facebook data via the Telegram API in UNC6032 compromises.

- Backdoors (e.g., XWORM, FROSTRIFT): These provide attackers with persistent remote access to the infected system. They can:

- Log keystrokes.

- Execute commands.

- Capture screenshots.

- Download and run additional malicious plugins or modules.

- Spread to USB drives (XWORM).

- FROSTRIFT even scans for 48 specific browser extensions related to password managers, authenticators, and digital wallets.

- Downloaders (e.g., GRIMPULL): This malware specializes in fetching further payloads from attacker-controlled servers (Command-and-Control, or C2 servers). GRIMPULL uses the Tor network to anonymize its C2 communication.

- Establishing Persistence: Attackers want to ensure their malware runs every time the computer starts. This is often achieved by creating registry keys (e.g., an AutoRun key for XWORM and FROSTRIFT) that automatically launch the malware.

- Covert Communication: The stolen data and malware commands need to be exchanged with the attackers.

- Noodlophile Stealer was observed by Morphisec communicating with attackers through a Telegram bot, a covert channel for exfiltrating stolen information.

- XWORM and FROSTRIFT use custom binary protocols over TCP to connect to their C2 servers, with XWORM also using Telegram for victim notifications and data exfiltration.
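The persistence step described above leaves traces a defender can look for. The following is a minimal Python sketch for reviewing autorun entries. It assumes the standard per-user Run key, which the article does not name. The function names and the list of suspicious path fragments are illustrative assumptions rather than published indicators. The one exception is C:\winsystem\, which the article mentions for STARKVEIL.

```python
import sys

# Standard per-user autorun location on Windows (an assumption; the article only
# says "an AutoRun key").
RUN_KEY = r"Software\Microsoft\Windows\CurrentVersion\Run"

# Lower-cased path fragments treated as worth a second look in this sketch.
# Only c:\winsystem\ comes from the article; the others are generic
# user-writable locations chosen for illustration.
SUSPICIOUS_FRAGMENTS = (
    "c:\\winsystem\\",
    "\\appdata\\local\\temp\\",
    "\\appdata\\roaming\\",
    "\\users\\public\\",
)


def flag_autorun_entries(entries: dict) -> dict:
    """Return the {name: command} entries whose command touches a suspicious path."""
    flagged = {}
    for name, command in entries.items():
        lowered = command.lower()
        if any(fragment in lowered for fragment in SUSPICIOUS_FRAGMENTS):
            flagged[name] = command
    return flagged


def read_current_user_run_key() -> dict:
    """Read the HKCU Run values on Windows; return an empty dict on other systems."""
    if sys.platform != "win32":
        return {}
    import winreg  # Windows-only standard library module

    entries = {}
    try:
        with winreg.OpenKey(winreg.HKEY_CURRENT_USER, RUN_KEY) as key:
            value_count = winreg.QueryInfoKey(key)[1]  # number of values under the key
            for index in range(value_count):
                name, value, _value_type = winreg.EnumValue(key, index)
                entries[name] = str(value)
    except OSError:
        return {}  # key missing or unreadable
    return entries
```

A typical use is `flag_autorun_entries(read_current_user_run_key())`. Legitimate software also launches from user-writable folders, so a hit is a prompt for manual review, not a verdict.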

The entire process, from the fake AI website to the deep system compromise, is a carefully orchestrated sequence of events, with each stage designed to build upon the last while evading detection.

The Shadowy Figures Behind the “Text-to-Malware” Campaigns

Attributing cyberattacks can be challenging, but researchers have uncovered some clues:

- The Noodlophile Stealer developer, according to Morphisec, is likely of Vietnamese origin, based on language indicators and social media profiles. This developer was seen offering Noodlophile as part of Malware-as-a-Service (MaaS) schemes in cybercrime marketplaces.

- Google’s Threat Intelligence Group (GTIG) assesses that the UNC6032 campaign (distributing STARKVEIL and associated malware) has a Vietnam nexus.

These threat actors demonstrate a high level of operational security and adaptability. Mandiant observed that they constantly rotate the domains used in their social media ads, likely to avoid detection and account bans. New domains are often registered and appear in ads within days, or even the same day. Most ads are also short-lived, with new ones being created daily.
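The short gap between domain registration and first ad appearance can serve as a triage signal. The following is a minimal Python sketch under stated assumptions: the `AdDomainSighting` record, the function names, and the seven-day threshold are all hypothetical. Registration dates would have to come from a WHOIS or RDAP lookup performed elsewhere.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AdDomainSighting:
    """One domain seen in an ad (hypothetical record layout)."""
    domain: str
    registered_on: date      # from a WHOIS/RDAP lookup done elsewhere
    first_seen_in_ad: date   # from ad monitoring, e.g. an ad library export


def registration_to_ad_lag_days(sighting: AdDomainSighting) -> int:
    """Days between domain registration and its first appearance in an ad."""
    return (sighting.first_seen_in_ad - sighting.registered_on).days


def flag_fast_deployed(sightings, max_lag_days: int = 7) -> list:
    """Return domains advertised within max_lag_days of being registered."""
    return [
        s.domain
        for s in sightings
        # A negative lag means inconsistent input data, so it is skipped.
        if 0 <= registration_to_ad_lag_days(s) <= max_lag_days
    ]
```

A domain registered and advertised on the same day has a lag of zero and is flagged, while a domain registered years earlier is not. New legitimate services also advertise quickly, so this signal is best combined with others.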

The Impact: Why These AI-Themed Attacks Are So Concerning

The weaponization of fake AI-themed websites represents a significant and evolving threat for several reasons:

- Exploiting Trust and Enthusiasm: AI is a hot topic, and many users are eager to experiment with new tools, sometimes lowering their guard regarding security.

- Broad Target Audience: Unlike highly technical exploits, these campaigns target a wide demographic, including individuals and businesses who may not have sophisticated cybersecurity defenses.

- Sophisticated Evasion: The multi-stage delivery and various obfuscation techniques make the malware difficult for traditional signature-based antivirus solutions to detect.

- Significant Data Theft Potential: The focus on infostealers means attackers can quickly gain access to a wealth of sensitive information, leading to financial loss, identity theft, and further compromise.

- Deep System Control: Backdoors give attackers persistent access, allowing for long-term spying, deployment of other malware (like ransomware), or using the infected machine in botnets.

- Rapid Evolution: Threat actors are constantly refining their tactics, changing domains, and updating malware payloads to stay ahead of defenders.

Staying Safe in an AI-Obsessed World: Your Defense Strategy

While these threats are sophisticated, a combination of vigilance and good security hygiene can significantly reduce your risk:

- Verify AI Platforms: Before using any new AI tool, especially free ones advertised on social media, do your research. Look for reviews from reputable sources, check the legitimacy of the website domain, and be wary of sites with a very recent registration date or generic appearance.

- Scrutinize Social Media Ads: Be highly skeptical of ads promising groundbreaking AI features for free. Check the authenticity of the page posting the ad.

- Beware of Downloads:

- Always be cautious about downloading executable files (.exe, .msi, .bat, .scr, etc.).

- Pay close attention to filenames. Look for multiple extensions (e.g., filename.mp4.exe). If your system is set to hide known extensions, change that setting to see the full filename.

- If a site promises a video or image and delivers a ZIP file containing an executable, that’s a major red flag.

- Use Comprehensive Security Software: Rely on a reputable endpoint detection and response (EDR) solution or a next-generation antivirus (NGAV) that uses behavioral analysis and heuristics, not just signatures, to detect threats. Morphisec’s Automated Moving Target Defense (AMTD) technology, for instance, is designed to prevent such attacks at the earliest infiltration stage by reshaping the attack surface.

- Keep Everything Updated: Ensure your operating system, web browsers, and all applications (especially security software) are regularly updated to patch known vulnerabilities.

- Educate and Train: If you’re in an organization, educate employees about these evolving social engineering tactics and malware threats. Individual users should also stay informed.

- Strong, Unique Passwords and Multi-Factor Authentication (MFA): While infostealers can capture saved passwords, having strong, unique passwords for each account and enabling MFA wherever possible can limit the damage if credentials are compromised.

- Inspect URLs Carefully: Before clicking, hover over links to see the actual destination URL. Look for misspellings or unusual domain names.
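The advice about ZIP downloads can be applied without extracting or running anything. The following is a minimal Python sketch that lists archive members that look like Windows executables, either by extension or by the "MZ" bytes that begin Windows executable files. The function name and the extension list are illustrative assumptions, and password-protected archives are not handled.

```python
import zipfile

# Extensions treated as executable in this sketch (partial, illustrative list).
EXECUTABLE_EXTS = (".exe", ".msi", ".bat", ".scr", ".cmd", ".com")


def find_executables_in_zip(zip_source) -> list:
    """Return (member_name, reason) pairs for members that look executable.

    zip_source may be a path or a binary file-like object. Members are only
    read, never extracted to disk or run.
    """
    findings = []
    with zipfile.ZipFile(zip_source) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue
            name = info.filename
            if name.lower().rstrip().endswith(EXECUTABLE_EXTS):
                findings.append((name, "executable extension"))
                continue
            # A media file should not start with the "MZ" executable signature.
            with archive.open(info) as member:
                if member.read(2) == b"MZ":
                    findings.append((name, "MZ header despite non-executable name"))
    return findings
```

If a download that was supposed to be a video yields any findings, treat the archive as hostile and delete it. An empty result only means these two checks found nothing.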

Conclusion: The Double-Edged Sword of AI Enthusiasm

Artificial intelligence offers incredible potential, but its popularity has inevitably attracted the attention of cybercriminals. The rise of AI-themed malware distributed via fake platforms is a stark reminder that vigilance is paramount in our increasingly digital lives. These campaigns, exemplified by the Noodlophile Stealer and the UNC6032 activity, are not opportunistic, low-effort attacks; they are sophisticated operations leveraging current trends to ensnare a broad audience.

As threat actors continue to evolve their tactics, users and organizations must cultivate a healthy skepticism towards unsolicited offers, especially those that seem too good to be true. By understanding the lure, the attack mechanics, and the preventative measures, we can collectively work towards mitigating the risks posed by this “text-to-malware” phenomenon and ensure that our engagement with AI remains positive and secure. The digital landscape is constantly shifting, and our defenses must adapt with it.

Frequently Asked Questions (FAQ)

What is AI-themed malware?

AI-themed malware refers to malicious software distributed by cybercriminals who exploit the public's interest in Artificial Intelligence. They often create fake AI tool websites (e.g., for video or image generation) that trick users into downloading malware instead of legitimate AI-generated content.

Why are cybercriminals using fake AI platforms to spread malware?

Cybercriminals use fake AI platforms because AI is a popular and exciting new technology, attracting a large and diverse audience. Many users are eager to try free AI tools, making them susceptible to social engineering tactics that lead to malware downloads.

How is this type of malware typically distributed?

This malware is commonly distributed through malicious advertisements on social media platforms like Facebook and LinkedIn, as well as through posts in online groups. These ads or posts direct users to fraudulent websites masquerading as legitimate AI service providers.

When did these AI-themed malware campaigns start becoming prominent?

Research from firms like Mandiant indicates that significant campaigns, such as UNC6032, have been active since at least mid-2024, with continuous evolution and new domains appearing frequently.

Who is primarily targeted by these AI-themed malware attacks?

While anyone can be a target, these campaigns often aim at a broader audience than traditional malware, including content creators, small businesses, and individuals curious about AI tools, who may be less aware of the specific security risks.

Relevant Resource List:

- Morphisec Blog: “New Noodlophile Stealer Distributes Via Fake AI Video Generation Platforms” - Detailed analysis of the Noodlophile Stealer.

- Google Cloud Blog (Mandiant): “Text-to-Malware: How Cybercriminals Weaponize Fake AI-Themed Websites” - Comprehensive report on the UNC6032 campaign and associated malware.

- Meta’s Ad Library (Facebook Ad Library) - Tool for transparency in advertising on Meta platforms.